Using statistical probability is very useful for visualizing how a process occurs. That is to say, doubling the number of molecules doubles the entropy.

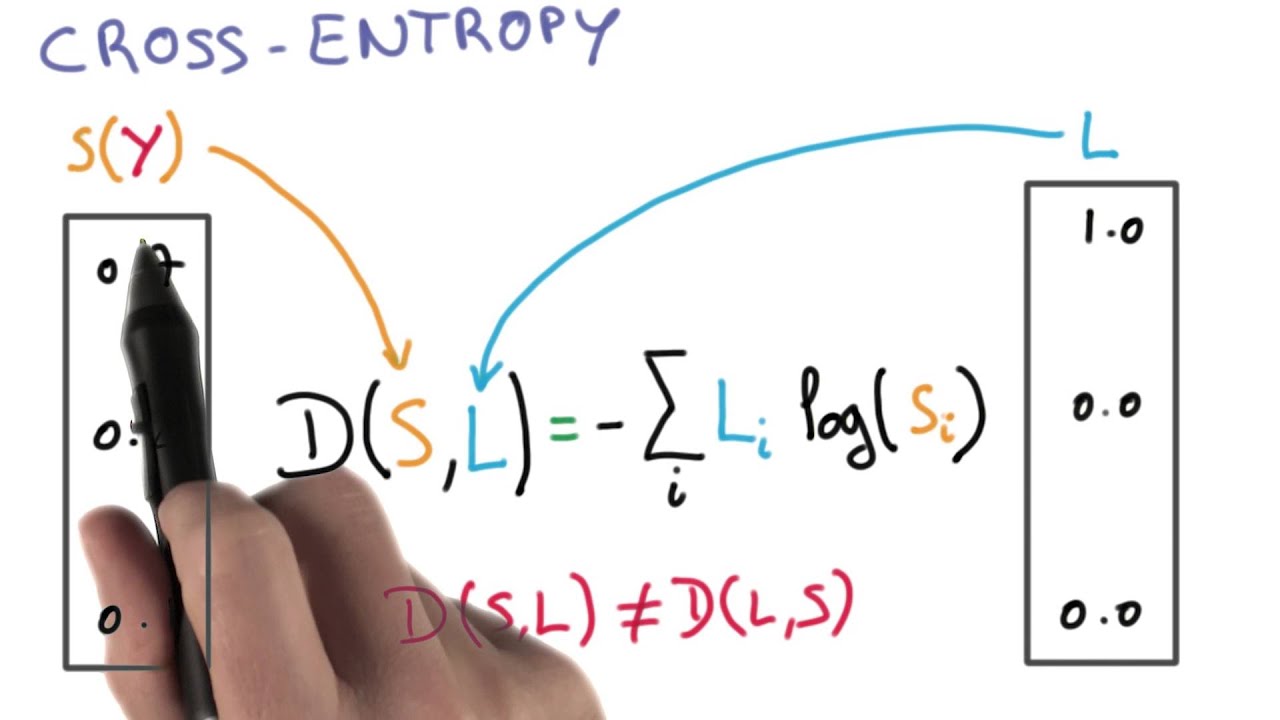

It is clear from this equation that entropy is an extensive property and depends on the number of molecules. The above equation is known as Boltzmann Equation, named after Austrian physicist Ludwig Boltzmann. W: Number of microstates corresponding to a given macrostate The key assumption made here is that each possible outcome is equally probable, leading to the following equation: Using Statistical Probability: Boltzmann Equation It can be quantitatively measured in terms of a system’s statistical probabilities or other thermodynamic quantities.
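The extensive behaviour described above can be checked numerically. The following is a minimal sketch, assuming the standard form of the Boltzmann equation, S = k_B ln W, and a hypothetical toy system of N independent two-state particles (so that W = 2^N). The function names are illustrative and do not come from the original text.

```python
import math

# Boltzmann constant in J/K (exact value in the 2019 SI definition).
K_B = 1.380649e-23


def boltzmann_entropy(num_microstates: int) -> float:
    """Return S = k_B * ln(W) in J/K, assuming all W microstates are equally probable."""
    if num_microstates < 1:
        raise ValueError("the number of microstates W must be at least 1")
    return K_B * math.log(num_microstates)


def two_state_microstates(num_particles: int) -> int:
    """Toy assumption: each particle has two independent states, so W = 2**N."""
    return 2 ** num_particles


s_100 = boltzmann_entropy(two_state_microstates(100))
s_200 = boltzmann_entropy(two_state_microstates(200))

# Doubling the number of particles squares W, which doubles ln(W) and hence S.
print(f"S(100 particles) = {s_100:.3e} J/K")
print(f"S(200 particles) = {s_200:.3e} J/K")
print(f"ratio = {s_200 / s_100:.3f}")
```

In this toy model the ratio comes out as 2 to within floating-point rounding, which matches the statement that doubling the number of molecules doubles the entropy.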


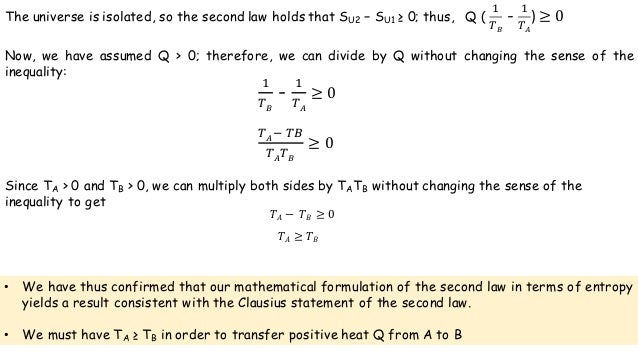

Entropy is an extensive property, meaning that it depends on the amount of matter. Since entropy measures disorder, a highly ordered system has low entropy, and a highly disordered one has high entropy. Entropy is often called the arrow of time because matter tends to move from order to disorder in isolated systems.

Entropy and the Second Law of Thermodynamics

A system at equilibrium does not undergo an entropy change because no net change is occurring. Generally, the combined entropy of the system and the surroundings increases for a spontaneous process. A positive entropy change means an increase in disorder. These attributes of entropy are essential for formulating the Second Law of Thermodynamics.

How to Calculate Entropy

Qualitatively, entropy measures how much the energy of atoms and molecules spreads out during a process.
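As a first numerical illustration, the second-law statement above (the combined entropy of system and surroundings increases for a spontaneous process and is unchanged at equilibrium) can be sketched in code. This is a minimal example under stated assumptions: the system and the surroundings are both treated as large reservoirs at fixed temperature, and each entropy change is taken as the heat received divided by the absolute temperature. The function names and the numbers are hypothetical.

```python
def total_entropy_change(ds_system: float, ds_surroundings: float) -> float:
    """Combined entropy change of system plus surroundings, in J/K."""
    return ds_system + ds_surroundings


def classify_process(ds_total: float, tolerance: float = 1e-12) -> str:
    """Classify a process from the sign of the combined entropy change."""
    if ds_total > tolerance:
        return "spontaneous"
    if ds_total < -tolerance:
        return "not spontaneous as written"
    return "equilibrium"


def heat_flow_entropy(heat_j: float, t_system_k: float, t_surroundings_k: float) -> float:
    """Heat `heat_j` leaves the system and enters the surroundings.

    Assumption: both sides stay at constant temperature, so each entropy
    change is simply (heat received) / (absolute temperature).
    """
    ds_system = -heat_j / t_system_k
    ds_surroundings = heat_j / t_surroundings_k
    return total_entropy_change(ds_system, ds_surroundings)


# 1000 J flows from a 400 K system into 300 K surroundings (hot to cold).
hot_to_cold = heat_flow_entropy(1000.0, 400.0, 300.0)
# The same heat flowing from a 300 K system into 400 K surroundings (cold to hot).
cold_to_hot = heat_flow_entropy(1000.0, 300.0, 400.0)
# Equal temperatures: no net change.
equal_temps = heat_flow_entropy(1000.0, 300.0, 300.0)

for label, ds in [("hot to cold", hot_to_cold),
                  ("cold to hot", cold_to_hot),
                  ("equal temperatures", equal_temps)]:
    print(f"{label}: dS_total = {ds:+.3f} J/K -> {classify_process(ds)}")
```

Heat flowing from hot to cold gives a positive combined entropy change, the reverse direction gives a negative one, and equal temperatures give zero, consistent with the equilibrium case described above.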


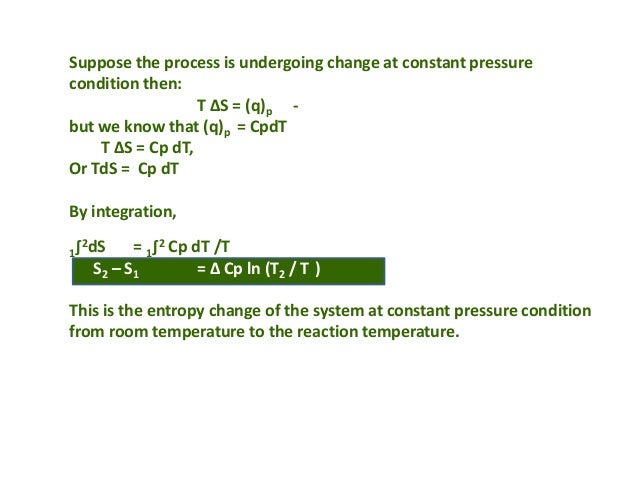

Entropy is the thermodynamic function used to quantify a system's disorder or randomness. The entropy change of a process is defined as the heat transferred reversibly divided by the absolute temperature. A reversible adiabatic expansion is isentropic, whereas an irreversible adiabatic expansion is not. The entropy of a solid (the particles are tightly packed) is lower than that of a gas (the particles are free to move). Scientists have also concluded that the total entropy increases in a spontaneous process. The total entropy comprises the entropy of the system and the entropy of the surroundings.

There are several equations to calculate the entropy:

1. When the process occurs at a constant temperature, the entropy change is:

$$\Delta S = \frac{q_{\text{rev}}}{T}$$

where $q_{\text{rev}}$ is the heat transferred reversibly and $T$ is the absolute temperature.

2. If the reaction is known, we can use a table of standard entropy values to find $\Delta S_{\text{rxn}}$:

$$\Delta S_{\text{rxn}} = \sum \Delta S_{\text{products}} - \sum \Delta S_{\text{reactants}}$$

where $\Delta S_{\text{rxn}}$ is the standard entropy change of the reaction, $\sum \Delta S_{\text{products}}$ is the sum of the standard entropy values of the products, and $\sum \Delta S_{\text{reactants}}$ is the sum of the standard entropy values of the reactants.

3. It is also possible to use the Gibbs free energy change ($\Delta G$) and the enthalpy change ($\Delta H$) to find $\Delta S$:

$$\Delta G = \Delta H - T \Delta S$$
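The three routes to an entropy change listed above can be sketched as small functions. This is an illustrative example rather than a definitive implementation. It assumes the standard forms ΔS = q_rev / T, ΔS_rxn = ΣS(products) − ΣS(reactants) with each term weighted by its stoichiometric coefficient, and ΔG = ΔH − TΔS rearranged for ΔS. The function names are hypothetical, and the numerical values are approximate textbook figures that should be checked against a reference table before use.

```python
def isothermal_entropy_change(q_rev_j: float, temperature_k: float) -> float:
    """Delta S = q_rev / T for a reversible process at constant temperature."""
    if temperature_k <= 0:
        raise ValueError("absolute temperature must be positive")
    return q_rev_j / temperature_k


def reaction_entropy_change(products, reactants) -> float:
    """Delta S_rxn = sum over products minus sum over reactants.

    Each argument is a list of (stoichiometric_coefficient, standard_entropy)
    pairs; weighting by the coefficient is an assumption of this sketch.
    """
    total_products = sum(coeff * s for coeff, s in products)
    total_reactants = sum(coeff * s for coeff, s in reactants)
    return total_products - total_reactants


def entropy_change_from_gibbs(delta_h: float, delta_g: float, temperature_k: float) -> float:
    """Rearranged Delta G = Delta H - T * Delta S, giving Delta S = (Delta H - Delta G) / T."""
    if temperature_k <= 0:
        raise ValueError("absolute temperature must be positive")
    return (delta_h - delta_g) / temperature_k


# Route 1: melting ice at 273.15 K, using roughly 6010 J/mol for the heat of fusion.
ds_fusion = isothermal_entropy_change(6010.0, 273.15)

# Route 2: N2 + 3 H2 -> 2 NH3, approximate standard entropies in J/(mol K).
ds_rxn = reaction_entropy_change(
    products=[(2, 192.8)],                  # NH3
    reactants=[(1, 191.6), (3, 130.7)],     # N2, H2
)

# Route 3: at the melting point the two phases are in equilibrium, so Delta G = 0.
ds_gibbs = entropy_change_from_gibbs(delta_h=6010.0, delta_g=0.0, temperature_k=273.15)

print(f"fusion of ice:     dS = {ds_fusion:.2f} J/(mol K)")
print(f"ammonia synthesis: dS = {ds_rxn:.1f} J/(mol K)")
print(f"from Gibbs energy: dS = {ds_gibbs:.2f} J/(mol K)")
```

Routes 1 and 3 agree for the melting example because ΔG is zero at the phase-transition temperature. The negative result for the ammonia reaction reflects four moles of gas becoming two, which is a decrease in disorder.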
